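```scala
// Each sample interleaves (x, y) pairs for x = 0.00, 0.01, ..., 0.99: 100 pairs, 200 values
val trend = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, i))  // y = x: rising trend

val flat = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, 0.3))  // y = 0.3: no trend
```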
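Every sample in this walkthrough uses the same encoding: 100 x-values from 0.00 to 0.99, each followed by its y-value, flattened into 200 doubles. Below is a minimal sketch of that pattern as a reusable helper. The name `series` is hypothetical and not part of neuroflow. The grid is built from integer indices instead of `Range.Double`.

```scala
// Hypothetical helper (not part of neuroflow): samples y = g(x) at x = 0.00, 0.01, ..., 0.99
// and interleaves the pairs as x0, y0, x1, y1, ... into one flat sequence.
def series(g: Double => Double): Seq[Double] =
  (0 until 100).map(k => k / 100.0).flatMap(x => Seq(x, g(x)))

// The two training samples expressed with the helper
val trendSample = series(x => x)    // y = x: rising trend
val flatSample  = series(_ => 0.3)  // y = 0.3: constant, no trend

println(trendSample.size)           // 200 values = 100 (x, y) pairs
```

Each later test sample fits the same mold: only the function `g` changes, for example `series(x => 1.0 - x)` for the linear decline.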

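```scala
val f = Sigmoid  // activation for both dense layers

// Input of trend.size (200) values -> 25 hidden units -> 1 output, trained on squared mean error
val net = Network(Vector(trend.size) :: Dense(25, f) :: Dense(1, f) :: SquaredMeanError())
```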
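This net maps each 200-value sample through 25 sigmoid units to a single sigmoid output, so every score lies strictly between 0 and 1. Below is a minimal sketch of that forward pass in plain Scala, without neuroflow. It assumes fully connected layers with no bias terms, which matches the 5025 weights reported after training. It also uses random, untrained weights, so the printed value itself carries no meaning. The names `sigmoid`, `dense` and `layer` are hypothetical.

```scala
import scala.util.Random

// Logistic sigmoid: squashes any real number into the open interval (0, 1)
def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

// One dense layer without bias: out(j) = f(sum over i of w(j)(i) * in(i))
def dense(w: Vector[Vector[Double]], in: Vector[Double], f: Double => Double): Vector[Double] =
  w.map(row => f(row.zip(in).map { case (a, b) => a * b }.sum))

val rnd = new Random(7)  // fixed seed so the sketch is reproducible

// Small random weights (assumption: neuroflow's actual initialisation is not shown here)
def layer(outputs: Int, inputs: Int): Vector[Vector[Double]] =
  Vector.fill(outputs, inputs)(rnd.nextGaussian() * 0.1)

val hidden = layer(25, 200)  // 25 x 200 weight matrix
val output = layer(1, 25)    //  1 x 25  weight matrix

// Input: the interleaved rising-trend sample, (x, y) with y = x
val input = (0 until 100).toVector.flatMap(k => Vector(k / 100.0, k / 100.0))

val prediction = dense(output, dense(hidden, input, sigmoid), sigmoid)
println(prediction)  // one value in (0, 1); meaningful only after training
```

Training adjusts these weights so that the trend sample pushes the output toward its target of 1.0 and the flat sample toward 0.0.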

```scala
// Target 1.0 for the rising trend, 0.0 for the flat line
net.train(Seq(trend, flat), Seq(->(1.0), ->(0.0)))
```

```
[INFO] [12.01.2016 12:52:33:853] [run-main-0] Took 61 iterations of 10000 with error 9.965761971223065E-5
Weights: 5025
[success] Total time: 118 s, completed 12.01.2016 12:52:33
```

Flat Result: DenseVector(0.010301838081712023)

Linear Trend Result: DenseVector(0.9962517960703637)

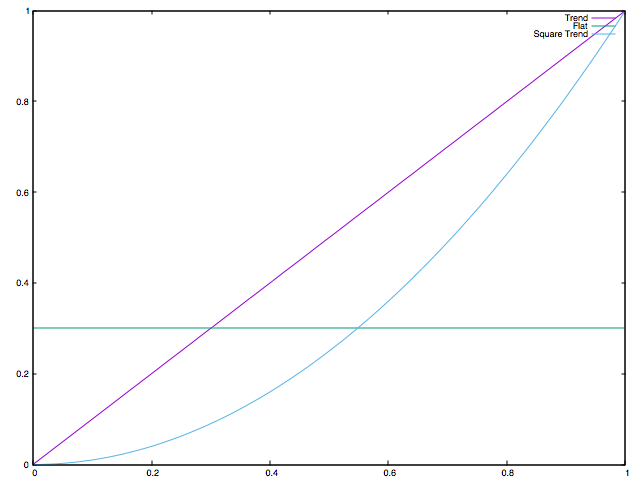
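The reported count of 5025 weights follows from the layer sizes. There are 200 inputs times 25 hidden units, plus 25 connections into the single output, and no bias terms are counted. Below is a sketch of that arithmetic. `weightCount` is a hypothetical helper.

```scala
// Hypothetical helper: connection weights in a chain of fully connected layers
// without biases, summing size(l) * size(l + 1) over each pair of adjacent layers.
def weightCount(layerSizes: Seq[Int]): Int =
  layerSizes.zip(layerSizes.drop(1)).map { case (in, out) => in * out }.sum

// Input vector (trend.size = 200), Dense(25, f), Dense(1, f)
println(weightCount(Seq(200, 25, 1)))  // 200 * 25 + 25 * 1 = 5025
```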

```scala
val squareTest = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, i * i))  // y = x * x: rising, curved
```

Square Trend Result: DenseVector(0.958457769839082)

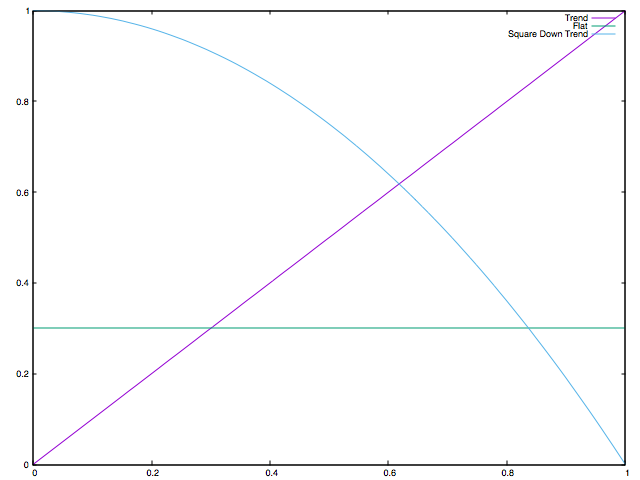

```scala
val declineTest = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, 1.0 - i))  // y = 1 - x: falling
```

Linear Decline Trend Result: DenseVector(0.0032519862410505525)

```scala
val squareDeclineTest = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, 1.0 - i * i))  // y = 1 - x * x: falling, curved
```

Square Decline Trend Result: DenseVector(0.011391593430466094)

```scala
val jammingTest = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, 0.5 * Math.sin(3 * i)))  // rises, then falls again
```

Jamming Result: DenseVector(0.03840459974525514)

```scala
val heroZeroTest = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, 0.5 * Math.cos(6 * i) + 0.5))  // drops from 1 to 0 and climbs back
```

HeroZero Result: DenseVector(0.024507592248881733)

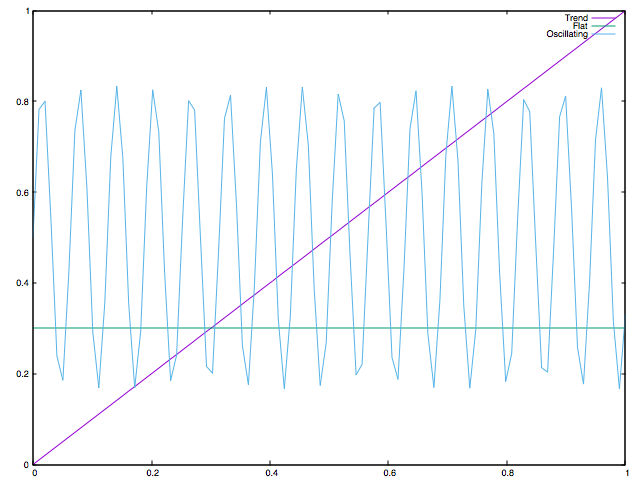

```scala
val oscillating = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, (Math.sin(100 * i) / 3) + 0.5))  // fast oscillation around 0.5
```

Oscillating Result: DenseVector(0.0332458093016362)

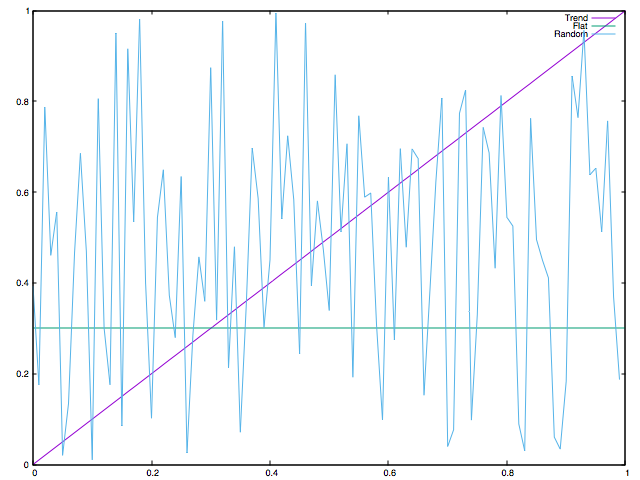
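Because x advances in steps of 0.01, the term `Math.sin(100 * i)` is evaluated at whole-number angles 0, 1, 2, ... radians. The oscillation therefore completes one period roughly every 2π ≈ 6.28 samples, and since 2π is irrational the pattern never repeats exactly on the grid. Below is a minimal check of that relationship. It rebuilds the x grid from integer indices, which is assumed to match the `Range.Double` grid up to floating-point rounding.

```scala
// Oscillating sample from the text: y = sin(100 * x) / 3 + 0.5 on x = 0.00 .. 0.99
val xs = (0 until 100).map(k => k / 100.0)
val ys = xs.map(x => math.sin(100 * x) / 3 + 0.5)

// Since 100 * x = k, the same values come from sampling sin at integer angles k
val viaIndex = (0 until 100).map(k => math.sin(k.toDouble) / 3 + 0.5)
val maxDiff = ys.zip(viaIndex).map { case (a, b) => math.abs(a - b) }.max

println(f"max difference: $maxDiff%.2e")            // floating-point noise only
println(f"samples per period: ${2 * math.Pi}%.2f")  // about 6.28
println(f"range: ${ys.min}%.3f .. ${ys.max}%.3f")   // stays within 0.5 +/- 1/3
```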

```scala
import scala.util.Random

val random = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, Random.nextDouble()))  // y uniform in [0, 1)
```

Random Result: DenseVector(0.3636381886772248)

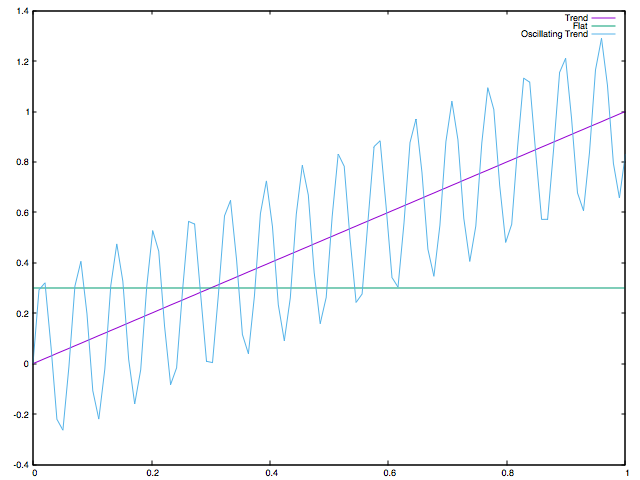
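The `random` and `realWorld` samples draw from the global `Random`, so their inputs, and therefore their scores, change from run to run. Below is a minimal sketch of a reproducible variant that passes in an explicitly seeded `scala.util.Random`. The helper name `randomSample` and the seed value are arbitrary.

```scala
import scala.util.Random

// Builds the interleaved (x, y) sample with y drawn uniformly from [0, 1).
// Passing an explicitly seeded generator makes the test input repeatable.
def randomSample(rnd: Random): Seq[Double] =
  (0 until 100).map(k => k / 100.0).flatMap(x => Seq(x, rnd.nextDouble()))

val first  = randomSample(new Random(42))
val second = randomSample(new Random(42))
println(first == second)  // true: same seed, same sequence
```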

```scala
val oscillatingUp = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, i + (Math.sin(100 * i) / 3)))  // rising trend with oscillation
```

Oscillating Up Result: DenseVector(0.9441733303039243)

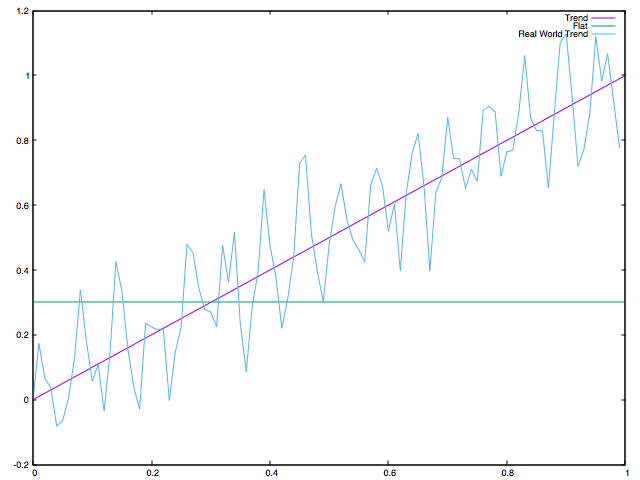

```scala
// Rising trend plus an oscillation scaled by a random factor in [0, 1)
val realWorld = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, i + (Math.sin(100 * i) / 3) * Random.nextDouble()))
```

Real World Result: DenseVector(0.9900808890038473)

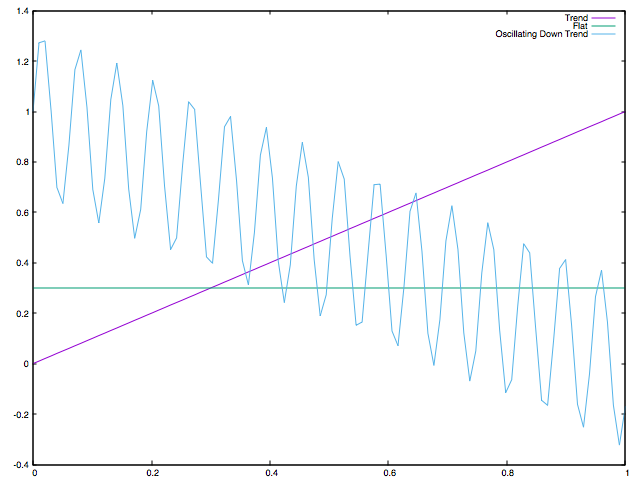

```scala
val oscillatingDown = Range.Double(0.0, 1.0, 0.01).flatMap(i => Seq(i, -i + (Math.sin(100 * i) / 3) + 1))  // falling trend with oscillation
```

Oscillating Down Result: DenseVector(0.0030360075614561453)
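For comparison with the network's scores, a plain least-squares slope over each sample's (x, y) pairs gives a rough, non-learned trend indicator. It comes out near 1 for the rising samples, near 0 for the flat one and near -1 for the declining ones. This is a separate technique, sketched only to help interpret the results above. It is not what the network computes. The helpers `slope` and `series` are hypothetical.

```scala
// Hypothetical baseline (not the network): ordinary least-squares slope of y on x
// for a sample stored as interleaved pairs x0, y0, x1, y1, ...
def slope(sample: Seq[Double]): Double = {
  val pairs = sample.grouped(2).map(p => (p(0), p(1))).toVector
  val n = pairs.size.toDouble
  val meanX = pairs.map(_._1).sum / n
  val meanY = pairs.map(_._2).sum / n
  val cov = pairs.map { case (x, y) => (x - meanX) * (y - meanY) }.sum
  val varX = pairs.map { case (x, _) => (x - meanX) * (x - meanX) }.sum
  cov / varX
}

// Hypothetical helper: interleaved (x, g(x)) pairs for x = 0.00 .. 0.99
def series(g: Double => Double): Seq[Double] =
  (0 until 100).map(k => k / 100.0).flatMap(x => Seq(x, g(x)))

val samples = Seq(
  "trend"           -> series(x => x),
  "flat"            -> series(_ => 0.3),
  "decline"         -> series(x => 1.0 - x),
  "square"          -> series(x => x * x),
  "oscillatingUp"   -> series(x => x + math.sin(100 * x) / 3),
  "oscillatingDown" -> series(x => -x + math.sin(100 * x) / 3 + 1)
)

samples.foreach { case (name, s) => println(f"$name%-16s slope = ${slope(s)}%.3f") }
```

Unlike the network, this baseline reports signed slopes rather than a 0-to-1 score. It also has to be chosen by hand, whereas the network learns its decision from the two labelled examples.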